Cloud Computing

-Pay for what you need

-Cloud computing is the on-demand delivery of IT resources over the internet with pay-as-you-go pricing

-Scale to what you need, whether that is 300 virtual servers or 2,000 terabytes of storage

The three cloud computing deployment models are cloud-based, on-premises, and hybrid.

Cloud-based

Run all parts of the application in the cloud.

Migrate existing applications to the cloud.

Design and build new applications in the cloud.

On-premises

On-premises deployment is also known as a private cloud deployment.

In this model, resources are deployed on premises by using virtualization and resource management tools.

For example, you might have applications that run on technology kept entirely in your on-premises data center. Though this model is much like legacy IT infrastructure, its incorporation of application management and virtualization technologies helps to increase resource utilization.

Hybrid

Connect cloud-based resources to on-premises infrastructure.

In a hybrid deployment, cloud-based resources are connected to on-premises infrastructure. You might want to use this approach in a number of situations.

For example, you might have legacy applications that are better maintained on premises, or government regulations might require your business to keep certain records on premises.

Advantages:

-Cost savings

-Stop spending money to run and maintain data centers

-No need to guess capacity

-Increase speed and agility
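To make "pay for what you need" and "no need to guess capacity" concrete, here is a minimal Python sketch comparing a fleet sized for peak demand with paying only for the servers running each hour. The hourly rate, the demand profile, and the function names are hypothetical assumptions for illustration, not AWS prices or APIs.

```python
# A minimal sketch: capacity sized for the peak vs. pay-as-you-go.
# All numbers are hypothetical and are NOT real AWS prices.

HOURLY_RATE_CENTS = 10  # assumed price of one server for one hour, in cents

# Assumed number of servers needed in each hour of one day:
# 8 quiet hours, 8 busy hours, 8 moderate hours.
HOURLY_DEMAND = [2] * 8 + [10] * 8 + [4] * 8


def fixed_capacity_cost(demand, rate_cents):
    """Guessing capacity: size for the busiest hour and pay for it all day."""
    return max(demand) * len(demand) * rate_cents


def pay_as_you_go_cost(demand, rate_cents):
    """Pay only for the servers actually running in each hour."""
    return sum(demand) * rate_cents


if __name__ == "__main__":
    fixed = fixed_capacity_cost(HOURLY_DEMAND, HOURLY_RATE_CENTS)
    on_demand = pay_as_you_go_cost(HOURLY_DEMAND, HOURLY_RATE_CENTS)
    print(f"Sized for peak: ${fixed / 100:.2f}")      # $24.00
    print(f"Pay-as-you-go:  ${on_demand / 100:.2f}")  # $12.80
```

With this made-up profile, paying per hour costs roughly half as much. If demand were flat all day, the two totals would be equal, so the savings depend on how much usage varies.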

Cloud computing service categories:

SaaS (Software as a Service)

In this case, third-party providers host applications and make them available to customers over the internet.

Examples: Salesforce, Concur

PaaS (Platform as a Service)

In this case, third-party providers host application development platforms and tools on their own infrastructure and make them available to customers over the internet.

Examples: Google App Engine, AWS Elastic Beanstalk

IaaS (Infrastructure as a Service)

In this case, third-party providers host servers, storage, and other virtual resources and make them available to customers over the internet.
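One way to compare the three categories is by how much of the stack the provider manages for you. The Python sketch below encodes a simplified layer model that is commonly used for this comparison; the layer names and the exact split are assumptions for illustration, and real providers and services draw the boundaries differently.

```python
# Simplified stack, from the top (closest to the user) to the bottom (hardware).
# This layer list and the split below are illustrative assumptions.
LAYERS = [
    "application", "data", "runtime", "operating system",
    "virtualization", "servers", "storage", "networking",
]

# How many layers, counted from the bottom, the provider manages.
PROVIDER_MANAGED_FROM_BOTTOM = {"IaaS": 4, "PaaS": 6, "SaaS": 8}


def split_responsibility(model):
    """Return (customer_layers, provider_layers) for a service category."""
    cut = len(LAYERS) - PROVIDER_MANAGED_FROM_BOTTOM[model]
    return LAYERS[:cut], LAYERS[cut:]


if __name__ == "__main__":
    for model in ("IaaS", "PaaS", "SaaS"):
        customer, provider = split_responsibility(model)
        print(f"{model}: you manage {customer or 'nothing'}; provider manages {provider}")
```

Moving from IaaS to PaaS to SaaS, the customer's share shrinks: with IaaS you still manage the operating system and everything above it, while with SaaS you simply use the application.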

EC2

When you’re working with AWS, those servers are virtual, and the service you use to gain access to virtual servers is called Amazon Elastic Compute Cloud (Amazon EC2). EC2 runs on top of physical host machines managed by AWS using virtualization technology. When you spin up an EC2 instance, you aren’t necessarily taking an entire host to yourself; instead, you share the host with multiple other instances, otherwise known as virtual machines. When you provision an EC2 instance, you choose its operating system, such as Windows or Linux. You can provision thousands of EC2 instances on demand.

Advantages

-Cost effective

-Highly flexible

-Quick to provision

-You can easily stop or terminate EC2 instances

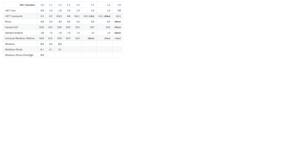

Different types of EC2 instances

The different instance families in EC2 are general purpose, compute optimized, memory optimized, accelerated computing, and storage optimized.

General purpose instances provide a good balance of compute, memory, and networking resources, and can be used for a variety of diverse workloads, such as web servers or code repositories. Typical uses include:

-Application servers

-Gaming servers

-Backend servers for enterprise applications

-Small and medium databases

Compute optimized instances are ideal for compute-intensive tasks such as gaming servers, high performance computing (HPC), and scientific modeling.

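Memory optimized instances are designed for memory-intensive tasks, such as workloads that process large datasets in memory.

Accelerated computing instances use hardware accelerators to perform functions such as floating point number calculations, graphics processing, and data pattern matching more efficiently than software running on general-purpose CPUs.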

Storage optimized instances are designed for workloads that require high, sequential read and write access to large datasets on local storage. Examples of workloads suitable for storage optimized instances include distributed file systems, data warehousing applications, and high-frequency online transaction processing (OLTP) systems.
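As a study aid, the Python sketch below turns the example workloads from these notes into a lookup table and returns every family whose examples mention a given workload. The dictionary and the `families_for` helper are hypothetical illustrations, not an AWS API; picking a real instance type also depends on size, price, and testing your own workload.

```python
# Example workloads for each EC2 instance family, based on the notes above.
# This table is a hypothetical study aid, not an AWS API.
FAMILY_EXAMPLES = {
    "general purpose": [
        "web servers", "code repositories", "application servers",
        "gaming servers", "backend servers for enterprise applications",
        "small and medium databases",
    ],
    "compute optimized": [
        "gaming servers", "high performance computing", "scientific modeling",
    ],
    "memory optimized": ["memory-intensive tasks"],
    "accelerated computing": [
        "floating point number calculations", "graphics processing",
        "data pattern matching",
    ],
    "storage optimized": [
        "distributed file systems", "data warehousing applications",
        "high-frequency online transaction processing",
    ],
}


def families_for(workload: str) -> list:
    """Return every family whose example list includes the workload."""
    return [
        family
        for family, examples in FAMILY_EXAMPLES.items()
        if workload in examples
    ]


if __name__ == "__main__":
    print(families_for("gaming servers"))
    print(families_for("graphics processing"))
```

Note that "gaming servers" appears under both general purpose and compute optimized, so the lookup can return more than one family; the right choice depends on how compute-intensive the particular game server is.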